Glaucoma is the leading cause of irreversible blindness worldwide, affecting more than 70 million people between the ages of 40 and 80. Tests to diagnose the disease and assess its impact are well established; however, descriptions of what glaucoma-affected vision is actually like for the patient have been limited to epidemiologic measures of function and imprecise visualizations of what the patient sees. Many patients diagnosed with early-stage glaucoma are prescribed lifelong therapies, yet they notice little or no visual disturbance. Because the eventual long-term impact of glaucoma on their activities of daily living and quality of life is not apparent to them, they may be less likely to adhere to treatment. Furthermore, the limited visual depictions of the disease may prevent providers and family members from offering empathetic care and support.

Existing visualizations of the first-person experience of glaucoma suffer from methodological shortcomings. Most are static 2D images that are not correlated with patient-specific visual field (VF) impairment, so they fail to capture the variability of vision loss and its effects on the patient's ability to interpret visual information. Moreover, most have not been derived from a systematic, patient-centered approach. There is therefore a need for better methods of visualizing the disease from the patient's perspective and for new ways of communicating that experience.

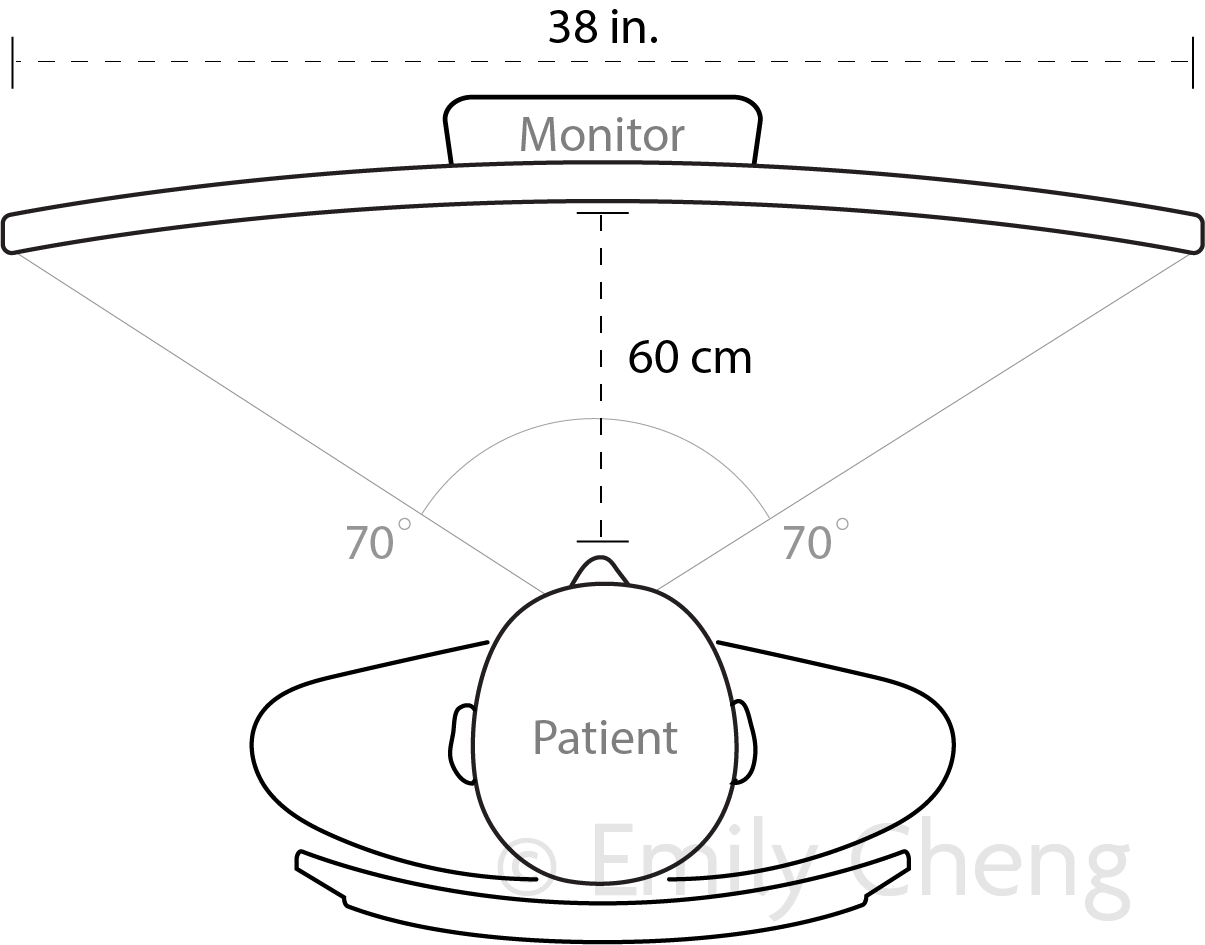

This research protocol addressed these needs through a two-phase process. Phase 1 characterized the visual experiences of several patients with unilateral, moderate-to-severe glaucoma through a series of custom eye assessments and interviews. Phase 2 depicted the resulting data using virtual reality (VR) with eye tracking to demonstrate dynamic aspects of the disease. The final VR application includes (i) a real-time video feed that shows users glaucomatous visual field loss patterns derived from our characterized patient data, (ii) an immersive environment for visual search tasks, with the option to toggle the disease-state representation off, and (iii) a patient education module with animations explaining the physiology of glaucoma, including how the disease pathology relates to findings on the tests commonly used to diagnose glaucoma and monitor its progression.